
Why Most A/B Tests Fail (and What to Do Differently)

10 Nov 2025

A/B testing looks scientific on the surface. Two versions, one audience, one winner. The method feels clean and precise, yet most tests never create lasting improvement. They deliver noise instead of knowledge.

At DIGITXL, we see this pattern every day. Teams run experiments but struggle to connect the results with real business outcomes. The problem rarely lies in the tool or the design; it lies in the structure that surrounds the work.

Conversion rate optimisation succeeds when testing becomes a process of disciplined learning. Below is a practical framework built from projects that have worked, and from the ones that taught us more than success ever did.

1. Strategy: Defining the Right Question

A strong experiment begins with purpose. Every test needs a question worth answering and a reason grounded in data or behaviour.

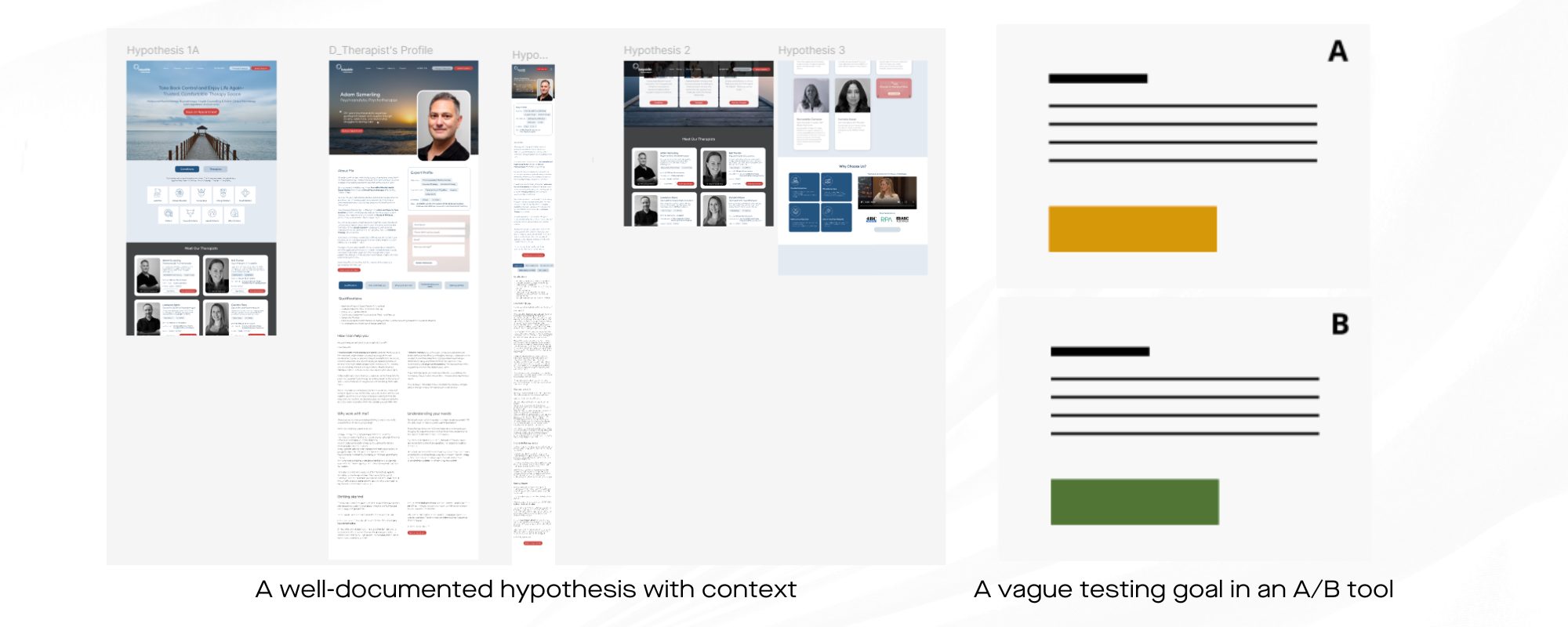

Start with one clear business goal, such as growth in qualified leads, completed purchases, or product engagement, and build the hypothesis from that point. Each statement should include evidence and expectation: “We believe changing X will improve Y because Z.”

Before writing code or copy, map the insight chain: objective → user problem → hypothesis → measurement plan.

This step aligns curiosity with revenue and sets a benchmark for what “success” means.
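One way to enforce the insight chain is to capture every test as a structured record, so nothing launches with a missing link. The Python sketch below is a minimal illustration. The `ExperimentPlan` class, its field names and the example values are hypothetical and not part of any testing tool.

```python
from dataclasses import dataclass, field


@dataclass
class ExperimentPlan:
    """One test's insight chain: objective -> user problem -> hypothesis -> measurement plan."""
    objective: str        # business goal the test serves
    user_problem: str     # observed behaviour or friction behind the idea
    change: str           # X: what the variant changes
    expected_effect: str  # Y: the outcome expected to improve
    rationale: str        # Z: evidence supporting the expectation
    primary_metric: str   # the one metric that decides the result
    secondary_metrics: list[str] = field(default_factory=list)

    def hypothesis(self) -> str:
        # Render the "We believe changing X will improve Y because Z" template.
        return (f"We believe changing {self.change} will improve "
                f"{self.expected_effect} because {self.rationale}.")

    def is_complete(self) -> bool:
        # Ready to launch only when every link in the chain is filled in.
        required = (self.objective, self.user_problem, self.change,
                    self.expected_effect, self.rationale, self.primary_metric)
        return all(part.strip() for part in required)


# Illustrative values only.
plan = ExperimentPlan(
    objective="More completed purchases",
    user_problem="Shoppers abandon checkout at the shipping step",
    change="where shipping costs are shown",
    expected_effect="checkout completion",
    rationale="recordings show hesitation at unexpected fees",
    primary_metric="checkout_completion_rate",
    secondary_metrics=["average_order_value"],
)
print(plan.hypothesis())
```

The `is_complete` check acts as a simple launch gate: a plan with an empty objective, rationale or metric is not ready to test.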

2. Design: Translating the Hypothesis into Experience

Effective test design depends on how well each variation controls for change. One variation equals one deliberate change. Every additional difference confounds the result: if the variant wins, you can no longer tell which change caused it.

Create a matrix of control versus variant and highlight what’s changing. Keep layouts consistent so behavioural shifts reflect genuine preference rather than surprise.
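The control-versus-variant matrix can also be checked mechanically. This sketch describes each version as a dictionary of page attributes, a hypothetical representation chosen for illustration. It lists every difference and raises an error if the variant changes more than one thing.

```python
def changed_attributes(control: dict, variant: dict) -> dict:
    """Return {attribute: (control_value, variant_value)} for every difference."""
    keys = set(control) | set(variant)
    return {
        key: (control.get(key), variant.get(key))
        for key in sorted(keys)
        if control.get(key) != variant.get(key)
    }


def check_single_change(control: dict, variant: dict) -> None:
    """Raise if the variant differs from control in anything other than one attribute."""
    diff = changed_attributes(control, variant)
    if len(diff) != 1:
        raise ValueError(f"Expected exactly one change, found {len(diff)}: {sorted(diff)}")


# Hypothetical page attributes for a call-to-action test.
control = {"cta_text": "Buy now", "cta_colour": "#0055ff", "hero_image": "hero-a.jpg"}
variant = {"cta_text": "Get started", "cta_colour": "#0055ff", "hero_image": "hero-a.jpg"}

for attribute, (before, after) in changed_attributes(control, variant).items():
    print(f"{attribute}: {before!r} -> {after!r}")
check_single_change(control, variant)  # passes: only cta_text differs
```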

Run a QA review before launch. Test accessibility, visual hierarchy, and copy tone. The design’s job is to isolate evidence, not distract from it.

3. Data & Setup: Building Integrity Before Launch

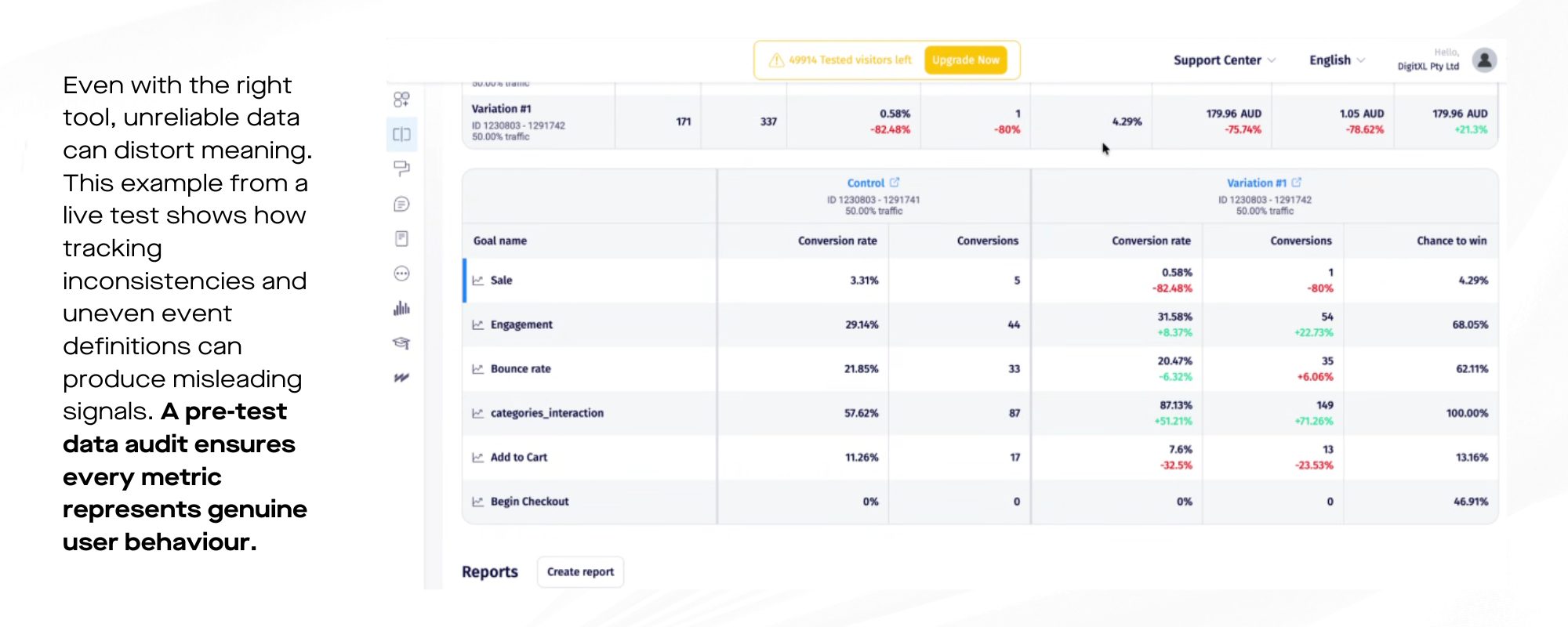

Reliable data determines whether results mean anything. Confirm that tracking scripts fire correctly, internal traffic is excluded, and events align with your analytics structure.
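Most analytics platforms can exclude internal traffic with built-in filters. If you work with raw event exports instead, the logic looks roughly like the sketch below. The network ranges and the event shape with an `ip` field are assumptions; substitute your own office and VPN ranges.

```python
import ipaddress

# Hypothetical internal ranges: a private office LAN and a documentation-reserved
# block standing in for a VPN range.
INTERNAL_NETWORKS = [ipaddress.ip_network(cidr) for cidr in ("10.0.0.0/8", "203.0.113.0/24")]


def is_internal(ip: str) -> bool:
    """True if the address falls inside any internal network."""
    address = ipaddress.ip_address(ip)
    return any(address in network for network in INTERNAL_NETWORKS)


def external_events(events: list[dict]) -> list[dict]:
    """Keep only events whose 'ip' field is outside the internal ranges."""
    return [event for event in events if not is_internal(event["ip"])]


events = [
    {"ip": "10.1.2.3", "event": "purchase"},         # staff test order
    {"ip": "198.51.100.7", "event": "purchase"},     # external visitor
    {"ip": "203.0.113.40", "event": "add_to_cart"},  # VPN user
]
print(external_events(events))
```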

Define a realistic sample size before deployment. Use a sample size calculator to confirm that enough visitors will arrive to detect your minimum detectable effect at the chosen significance level and statistical power. Even splits and verified tagging prevent painful re-runs.
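Sample size calculators typically apply a formula like the one below. This is a minimal Python sketch of the common normal-approximation formula for comparing two conversion rates with a two-sided z-test. The function name, the 5% baseline and the 10% relative minimum detectable effect are illustrative assumptions. Dedicated calculators may use a slightly different variant of the formula and return somewhat different numbers.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, relative_mde: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed in EACH arm of a two-sided, two-proportion z-test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)        # smallest improved rate we want to detect
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance threshold
    z_beta = NormalDist().inv_cdf(power)           # quantile for the desired power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


n = sample_size_per_variant(baseline_rate=0.05, relative_mde=0.10)
print(n)  # roughly 31,000 visitors per variant
```

The required sample grows with the inverse square of the effect size. Halving the detectable lift roughly quadruples the visitors needed, which is why small expected effects on low-traffic pages are often untestable.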

A short pre-test audit using live traffic can reveal setup errors early and save entire sprints of wasted effort.
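One check that fits a pre-test audit is a sample ratio mismatch (SRM) test. If a 50/50 split delivers visibly uneven traffic, assignment or tagging is probably broken. The sketch below uses a chi-square goodness-of-fit test with one degree of freedom. The counts are hypothetical, and the p < 0.001 alert threshold is a commonly used convention rather than a fixed rule.

```python
import math


def srm_p_value(visitors_a: int, visitors_b: int, expected_share_a: float = 0.5) -> float:
    """Chi-square goodness-of-fit p-value for sample ratio mismatch (1 degree of freedom)."""
    total = visitors_a + visitors_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = ((visitors_a - expected_a) ** 2 / expected_a
            + (visitors_b - expected_b) ** 2 / expected_b)
    # For 1 degree of freedom, P(X >= chi2) = erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


# Hypothetical first-day counts from an intended 50/50 split.
p = srm_p_value(5_000, 5_400)
if p < 0.001:
    print(f"Possible sample ratio mismatch (p = {p:.2g}); check assignment and tagging.")
```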

4. Duration & Discipline: Running Tests Long Enough

Patience is underrated in experimentation. Stopping a test when early numbers look promising creates false positives.
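A small simulation makes the false-positive risk concrete. It runs hypothetical A/A tests, where both arms share the same true conversion rate, so any declared winner is a false positive. It compares stopping on the first day that shows p < 0.05 against testing once at the planned end. The traffic figures are arbitrary assumptions, and the test is a simple pooled two-proportion z-test.

```python
import random
from statistics import NormalDist


def z_test_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = (pooled * (1 - pooled) * (1 / n_a + 1 / n_b)) ** 0.5
    if se == 0:
        return 1.0  # no conversions (or all conversions) in both arms
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate_aa_tests(runs: int = 300, days: int = 10, visitors_per_day: int = 100,
                      rate: float = 0.05, alpha: float = 0.05, seed: int = 42):
    """Simulate A/A tests (no real difference) and compare two stopping rules."""
    rng = random.Random(seed)
    peeking_hits = fixed_hits = 0
    for _ in range(runs):
        conv_a = conv_b = n = 0
        stopped_early = False
        for _ in range(days):
            n += visitors_per_day
            conv_a += sum(rng.random() < rate for _ in range(visitors_per_day))
            conv_b += sum(rng.random() < rate for _ in range(visitors_per_day))
            # Peeking rule: declare a winner the first day p < alpha.
            if not stopped_early and z_test_p_value(conv_a, n, conv_b, n) < alpha:
                stopped_early = True
        peeking_hits += stopped_early
        # Fixed-horizon rule: test once, at the planned sample size.
        fixed_hits += z_test_p_value(conv_a, n, conv_b, n) < alpha
    return peeking_hits / runs, fixed_hits / runs


peek_rate, fixed_rate = simulate_aa_tests()
print(f"False positives with daily peeking:   {peek_rate:.1%}")
print(f"False positives with one planned look: {fixed_rate:.1%}")
```

The daily-peeking rule also checks the final day, so its false-positive rate can never be lower than the fixed-horizon rate on the same simulated data. In practice it typically lands noticeably above the nominal 5%.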

Set a minimum runtime that covers at least two complete business cycles, and fix the confidence threshold before launch. Monitor daily health metrics without editing variables mid-test.
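The runtime rule reduces to simple arithmetic. Divide the planned sample by daily eligible traffic, then round up to whole business cycles, with a floor of two cycles. This sketch assumes a seven-day business cycle and uses hypothetical traffic figures. Adjust `cycle_days` if your cycle differs.

```python
import math


def minimum_runtime_days(visitors_needed_per_variant: int, variants: int,
                         daily_visitors: int, cycle_days: int = 7,
                         min_cycles: int = 2) -> int:
    """Days to run: enough to reach the planned sample, rounded up to whole business cycles."""
    days_for_sample = math.ceil(visitors_needed_per_variant * variants / daily_visitors)
    cycles = max(min_cycles, math.ceil(days_for_sample / cycle_days))
    return cycles * cycle_days


# Hypothetical: 31,000 visitors per arm, 2 arms, 4,000 eligible visitors a day.
print(minimum_runtime_days(31_000, 2, 4_000))  # 21
```

Here the sample alone would need 16 days, but stopping mid-week would over-represent some weekdays, so the run extends to three full weeks.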

Real insight emerges after stability, not after excitement.

5. Analysis: Interpreting Beyond the Obvious

Results need context. “Variant B won” means little without segmentation. Analyse outcomes by device, channel, and audience type. Look at secondary metrics such as bounce rate or average order value.
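A segment breakdown can be as simple as grouping per-visitor rows by segment and variant. The row format (`variant`, `device`, `converted`) is a hypothetical export shape. Segments split the sample into smaller groups, so treat a difference in one segment as a lead for a follow-up test rather than a result in its own right.

```python
from collections import defaultdict


def conversion_by_segment(rows: list[dict], segment_key: str) -> dict:
    """Return {(segment, variant): (visitors, conversion_rate)} from per-visitor rows."""
    totals = defaultdict(lambda: [0, 0])  # (segment, variant) -> [visitors, conversions]
    for row in rows:
        bucket = totals[(row[segment_key], row["variant"])]
        bucket[0] += 1
        bucket[1] += int(row["converted"])
    return {key: (visitors, conversions / visitors)
            for key, (visitors, conversions) in sorted(totals.items())}


# Hypothetical per-visitor export.
rows = [
    {"variant": "A", "device": "mobile", "converted": 0},
    {"variant": "A", "device": "mobile", "converted": 1},
    {"variant": "B", "device": "mobile", "converted": 1},
    {"variant": "A", "device": "desktop", "converted": 1},
    {"variant": "B", "device": "desktop", "converted": 0},
]
for (segment, variant), (visitors, rate) in conversion_by_segment(rows, "device").items():
    print(f"{segment:<8} {variant}  n={visitors:<4} rate={rate:.1%}")
```

Printing the visitor count next to each rate keeps small, noisy segments visible for what they are.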

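Express improvement as both relative lift and absolute difference. A change from 2.0% to 2.2% conversion is a 10% relative lift but only 0.2 percentage points in absolute terms, and the absolute figure is what multiplies with traffic to produce revenue. Document every finding, especially those that disprove assumptions. Knowledge compounds faster than wins.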
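The sketch below reports both forms of improvement for one hypothetical result, plus a normal-approximation (Wald) 95% confidence interval for the absolute difference. The function name and counts are illustrative assumptions. For small samples or rates near 0%, a more robust interval method may be preferable.

```python
from statistics import NormalDist


def summarise_lift(conv_a: int, n_a: int, conv_b: int, n_b: int,
                   confidence: float = 0.95) -> dict:
    """Absolute difference (percentage points), relative lift, and a Wald CI for the difference."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    absolute = rate_b - rate_a      # in proportion units; x100 gives percentage points
    relative = absolute / rate_a    # lift relative to the control rate
    se = (rate_a * (1 - rate_a) / n_a + rate_b * (1 - rate_b) / n_b) ** 0.5
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return {
        "control_rate": rate_a,
        "variant_rate": rate_b,
        "absolute_pp": absolute * 100,
        "relative_lift_pct": relative * 100,
        "ci_pp": ((absolute - z * se) * 100, (absolute + z * se) * 100),
    }


summary = summarise_lift(conv_a=400, n_a=20_000, conv_b=440, n_b=20_000)
print(f"{summary['absolute_pp']:.2f} pp absolute, {summary['relative_lift_pct']:.1f}% relative")
low, high = summary["ci_pp"]
print(f"95% CI for the difference: {low:.2f} to {high:.2f} pp")
```

In this example a 10% relative lift is only 0.2 percentage points, and the interval for the difference still includes zero. On its own, the result would not justify calling a winner.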

6. Integration: Turning Results into Continuous Learning

Experiments reach full value only when insights feed future decisions. Store outcomes, learnings, and hypotheses in a central experimentation library. Share them in retros and planning sessions so new ideas build on proven evidence.

Tag each insight by theme (copy, design, offer, timing) to create searchable intelligence. Over time, this practice transforms A/B testing into a consistent feedback engine for product, marketing, and design teams.
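An experimentation library does not need special tooling to start. This minimal in-memory sketch stores each record with theme tags and returns every record that carries all the requested tags. The class names, fields and entries are hypothetical, and a shared spreadsheet or database table could serve the same purpose.

```python
from dataclasses import dataclass, field


@dataclass
class ExperimentRecord:
    name: str
    hypothesis: str
    outcome: str      # e.g. "win", "loss" or "inconclusive"
    learning: str     # what the result taught the team
    tags: set[str] = field(default_factory=set)


class ExperimentLibrary:
    """Minimal in-memory experiment library, searchable by theme tag."""

    def __init__(self) -> None:
        self._records: list[ExperimentRecord] = []

    def add(self, record: ExperimentRecord) -> None:
        self._records.append(record)

    def by_tag(self, *tags: str) -> list[ExperimentRecord]:
        # Return records that carry every requested tag.
        wanted = set(tags)
        return [record for record in self._records if wanted <= record.tags]


# Illustrative entries, not real results.
library = ExperimentLibrary()
library.add(ExperimentRecord(
    name="checkout-shipping-copy",
    hypothesis="Clearer shipping copy will lift checkout completion",
    outcome="win",
    learning="Example learning text",
    tags={"copy", "offer"},
))
library.add(ExperimentRecord(
    name="pdp-hero-layout",
    hypothesis="A larger product image will lift add-to-cart",
    outcome="inconclusive",
    learning="Example learning text",
    tags={"design"},
))
print([record.name for record in library.by_tag("copy")])
```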

7. Reference Framework: A/B Testing Failure Points and Fixes

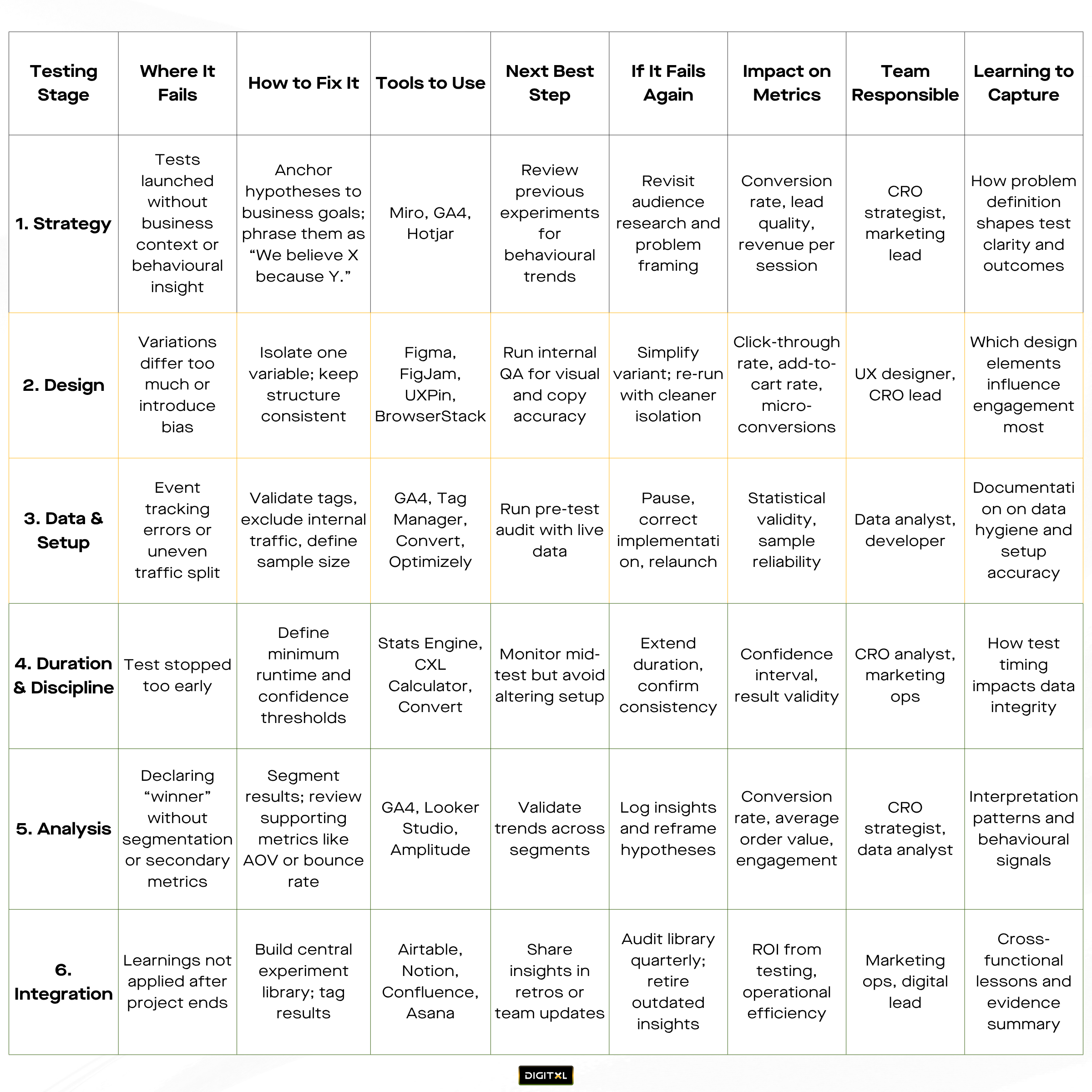

Each stage of testing introduces different points of risk and opportunity. The table below outlines how to identify, correct, and recover from common breakdowns across the full experimentation lifecycle.

It also maps the business impact, team accountability, and learnings that convert testing from a tactic into an operational system.

Testing success depends on alignment between people, process, and interpretation. When each stage is owned, measured, and documented, A/B testing evolves from campaign activity to a continuous learning engine that sharpens every marketing decision.

8. Conclusion

Reliable experimentation comes from structure and discipline. Each stage (strategy, design, data, timing, analysis, and integration) creates the conditions for truth.

At DIGITXL, our conversion rate optimisation consultants approach testing as an operating system for growth. Every experiment must inform the next, building a living record of how real users behave.

As a CRO agency in Australia, we see stronger performance when teams treat A/B testing as a method of understanding people rather than proving ideas. Clarity turns experiments into evidence. Evidence turns learning into growth.

9. Frequently Asked Questions (FAQs)

1. Why do most A/B tests fail, even when a CRO agency is involved?

Most A/B tests fail because they’re treated as one-off tactics, not part of a disciplined experimentation system. If your CRO agency isn’t grounding hypotheses in business goals, enforcing clean data, or documenting learnings, you’ll get “winners” that never translate into lasting revenue or conversion uplift.

2. How can a CRO agency in Australia turn ad-hoc A/B tests into a structured CRO program?

A CRO agency in Australia can centralise all your tests into a single roadmap, prioritised by impact and effort, then standardise how hypotheses, metrics and runtime rules are defined. Over time, that turns scattered experiments into a repeatable CRO program where each test builds on previous insights instead of starting from zero.

3. What should a CRO agency in Melbourne audit before we launch any A/B test?

A CRO agency in Melbourne should first audit tracking accuracy, event naming, traffic splits and sample size assumptions, then review page design for bias (too many changes, unclear hierarchy, inconsistent states). They’ll also pressure-test the hypothesis and primary metric to ensure the test is actually capable of answering a meaningful business question.

4. When is the right time to bring in a CRO agency to own experimentation strategy?

You’re ready for a CRO agency when you have reliable traffic but flat conversions, or when internal teams are running tests without a clear framework, documentation or business linkage. At that point, giving a specialist agency ownership of experimentation strategy prevents wasted sprints and turns A/B testing into a core growth lever.

5. What if our A/B tests keep showing “no significant lift” – can a CRO agency fix that?

Consistent “no lift” results usually point to weak hypotheses, underpowered samples or noise in your data, not that optimisation “doesn’t work”. A strong CRO agency will recalibrate your research inputs, refine test design, tighten targeting and rebuild your experiment library so even losing tests generate insight that compounds into future wins.