What is Experience Optimisation?

03 Jun 2025

Most teams who prioritise experience aren’t starting from zero. They’ve built working journeys. Tested pathways. Invested in UX. Launched personalisation streams. Aligned on performance metrics. And still, something isn’t quite holding.

- Conversion moves, but retention doesn’t.

- Uplift happens, but trust lags.

- Testing velocity rises, but backlog clarity weakens.

This is about scope, not execution.

Because experience doesn’t live entirely in one team’s line of sight. It lives in the grey — between what’s owned, what’s inherited, and what no one flags until it impacts someone downstream.

Experience is a capability, and the more platforms you run (Adobe, GA4, Tealium, Optimizely, Braze), the more necessary that capability becomes.

1. Optimisation rarely starts with a clean brief

Few teams start experience work with a blank canvas. What they’re working with is usually a patchwork:

- A checkout rebuilt three times to accommodate promotions, fraud logic, and fulfilment rules

- Consent flows modelled to meet GDPR and Australian privacy standards, but not updated post-CDP rollout

- Personalisation layers that vary based on whether the user lands from Braze, paid search, or a referral

- Site experiences split across headless CMS logic and containerised components, with no unified handoff tracking

The question isn’t “what should we build?”

It’s “which behaviours are we unintentionally shaping — and where are they breaking the loop?” Experience work starts where system logic outpaces journey logic.

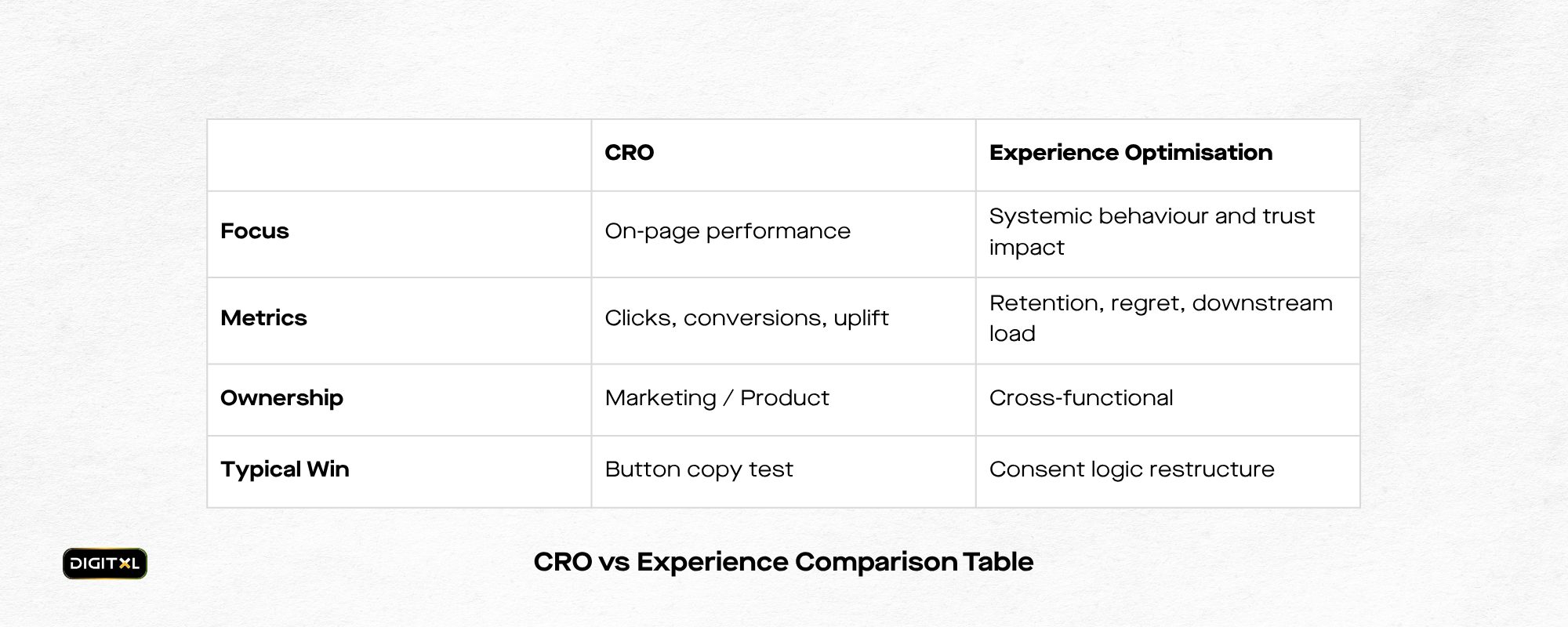

2. Testing coverage doesn’t guarantee experience integrity

It’s easy to assume that test velocity equals insight quality. But even mature testing programs can under-detect critical gaps in flow.

Some patterns we see repeatedly:

- Significant test wins that coincide with increased support tickets

- Campaign journeys where segmentation works, but routing rules delay fulfilment

- Login states that override context persistence (promo codes, prefilled fields, etc.)

- Funnel steps optimised in isolation, with different definitions of success across teams

What is User Experience (UX) Optimisation? It’s what holds after the test ends, when multiple systems, such as identity resolution, consent flags, content eligibility, and fulfilment, begin to intersect.

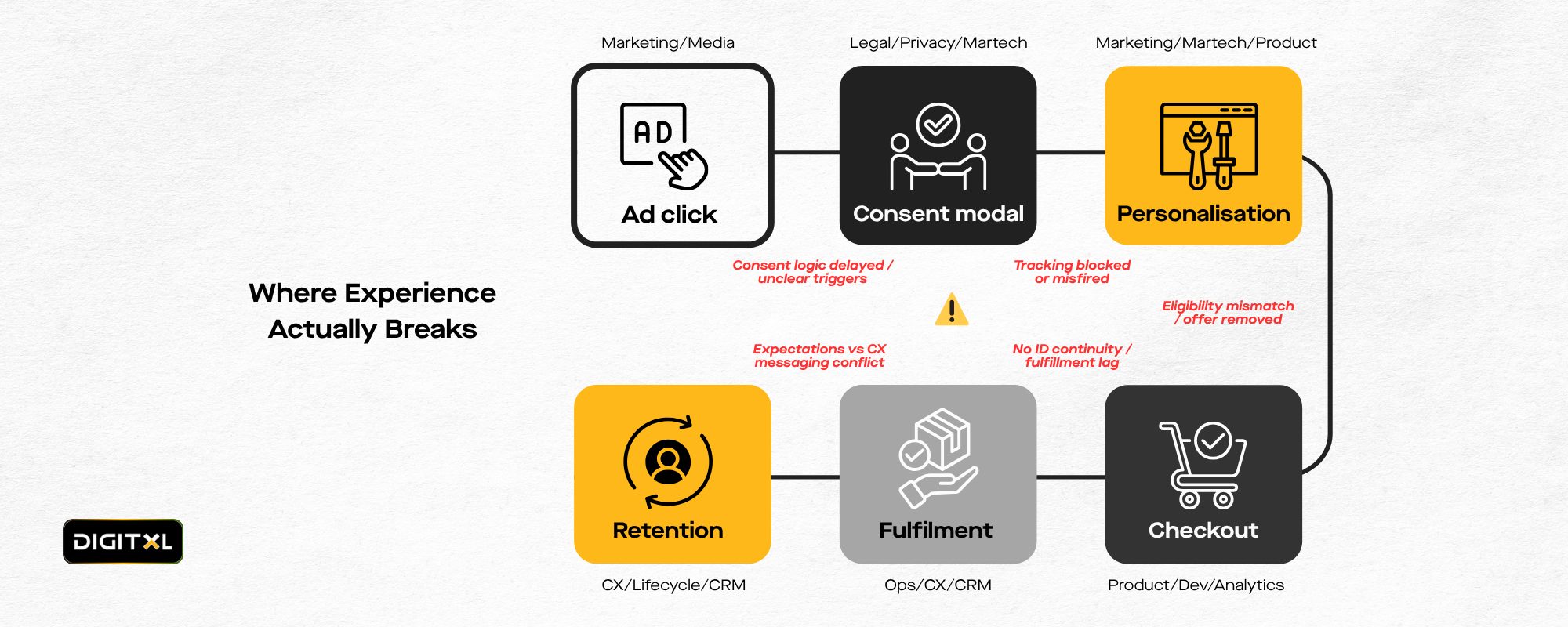

3. Most friction doesn’t start in UX. It starts in handovers.

Where things fall through:

- Consent policies are updated, but legacy journeys keep firing unapproved tags

- Personalisation conditions are valid in the CDP, but excluded in certain CMS pathways

- Experimentation tools apply variations that conflict with real-time pricing logic

- Cross-domain logins lose identifiers passed between cart and account

If no one owns the connective logic, the stuff between tags, tools, and templates, the experience feels less like a path and more like a patch.

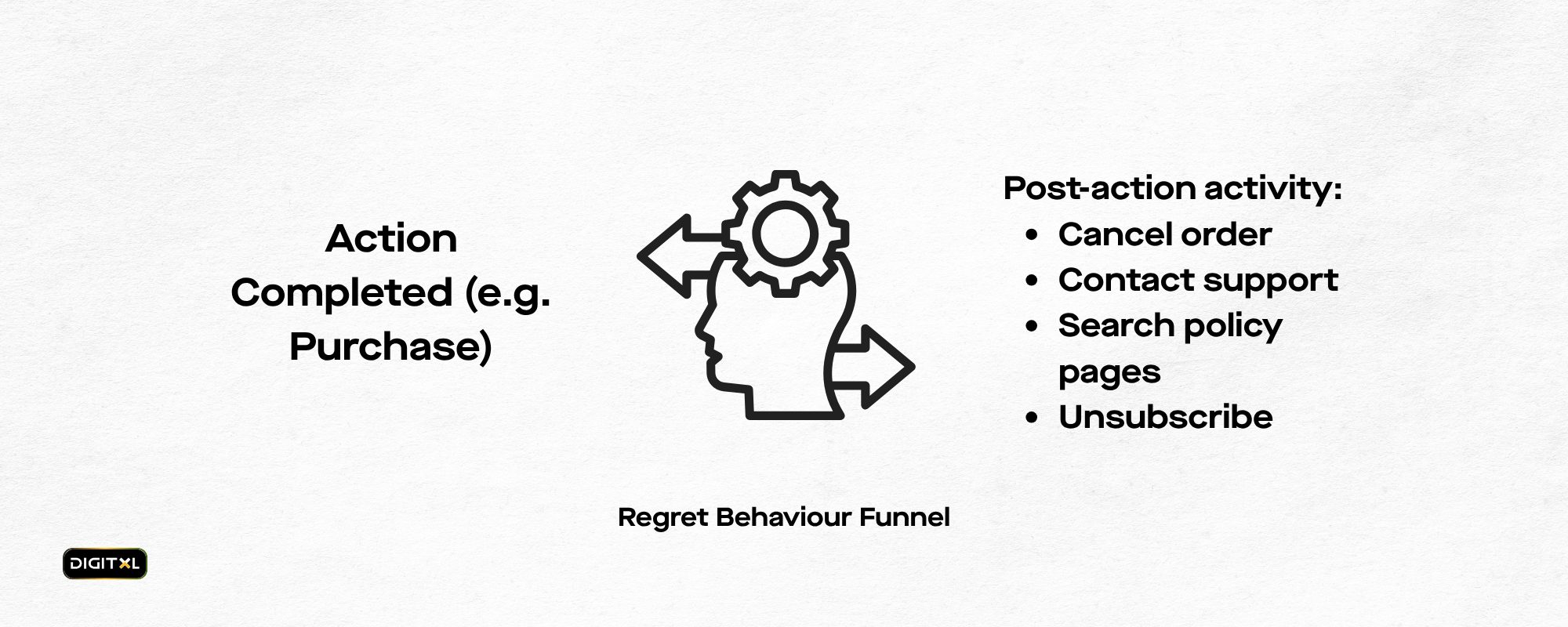

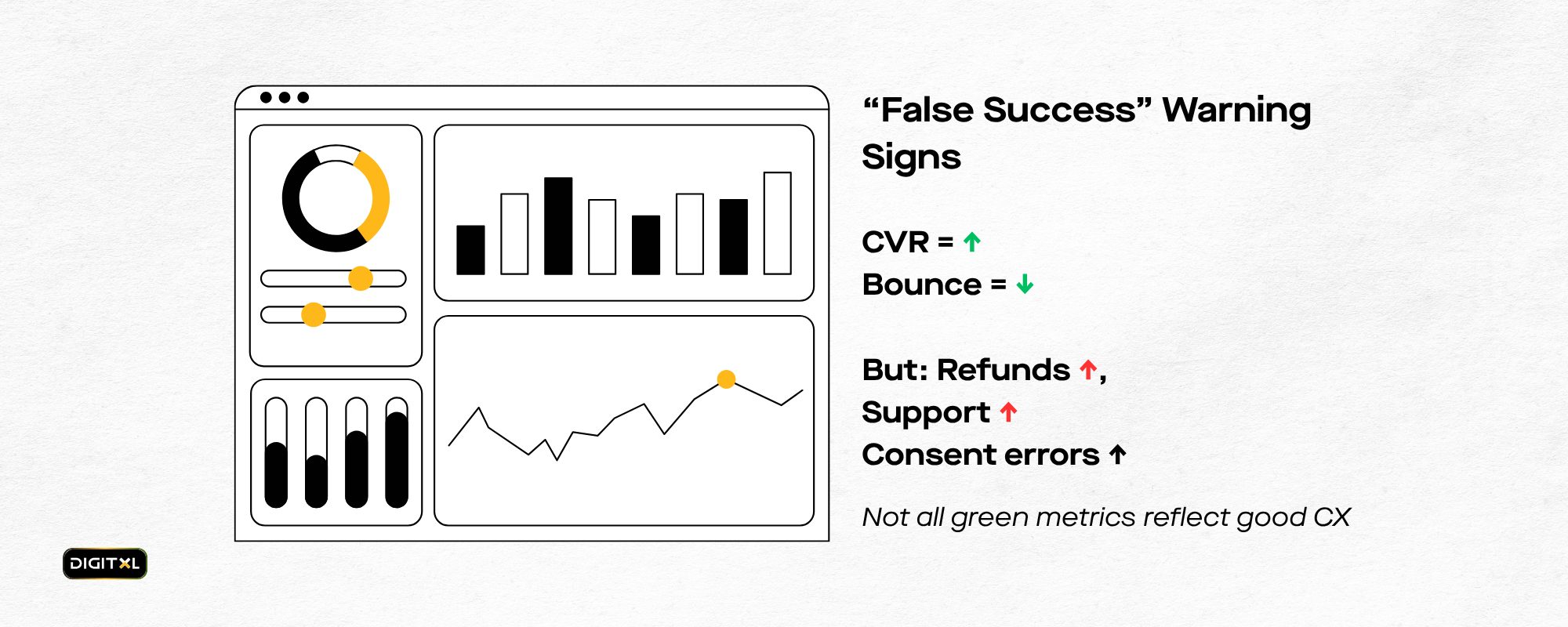

4. The numbers don’t always reflect the experience

Teams often rely on GA4, Adobe Analytics, or dashboard blends to track conversion performance. But experience signals often sit outside those tools entirely.

Examples:

- Regret behaviour: cancellations, returns, unsubscriptions shortly after action

- Clarification loops: CX tickets from users who already “converted”

- Trust lag: repeated visits to policy or support content after key interactions

- Consent volatility: users toggling preferences or resubmitting forms repeatedly

These aren’t friction points in the traditional sense. They’re signs that the interaction succeeded functionally but failed contextually.

Standard reporting layers won’t show that. They’ll show completions.

Experience optimisation asks: was that completion clear, durable, and aligned — or just technically valid?
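One way to make a signal like regret behaviour visible is to measure how many completions are followed by a reversal from the same user shortly afterwards. The sketch below is illustrative only: the event shape, the event names, and the 48-hour window are assumptions, not fields from GA4, Adobe Analytics, or any specific tool.

```python
from datetime import datetime, timedelta

# Hypothetical event shape: (user_id, event_type, timestamp).
# Event names and the 48-hour window are illustrative assumptions.
REGRET_EVENTS = {"cancellation", "return", "unsubscribe"}
REGRET_WINDOW = timedelta(hours=48)


def regret_rate(events, conversion_type="purchase", window=REGRET_WINDOW):
    """Share of conversions followed by a regret event from the same user within `window`."""
    conversions = [(u, t) for u, e, t in events if e == conversion_type]
    regrets = [(u, t) for u, e, t in events if e in REGRET_EVENTS]
    if not conversions:
        return 0.0
    regretted = 0
    for user, converted_at in conversions:
        # Count the conversion once if any regret event lands inside the window.
        if any(
            r_user == user and timedelta(0) <= r_time - converted_at <= window
            for r_user, r_time in regrets
        ):
            regretted += 1
    return regretted / len(conversions)


events = [
    ("u1", "purchase", datetime(2025, 6, 1, 10, 0)),
    ("u1", "return", datetime(2025, 6, 2, 9, 0)),         # 23 hours later: counts
    ("u2", "purchase", datetime(2025, 6, 1, 11, 0)),
    ("u3", "purchase", datetime(2025, 6, 1, 12, 0)),
    ("u3", "unsubscribe", datetime(2025, 6, 10, 12, 0)),  # 9 days later: outside window
    ("u4", "purchase", datetime(2025, 6, 1, 13, 0)),
]

print(regret_rate(events))  # 0.25
```

A completion report would show four purchases here. The regret rate shows that one in four did not hold.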

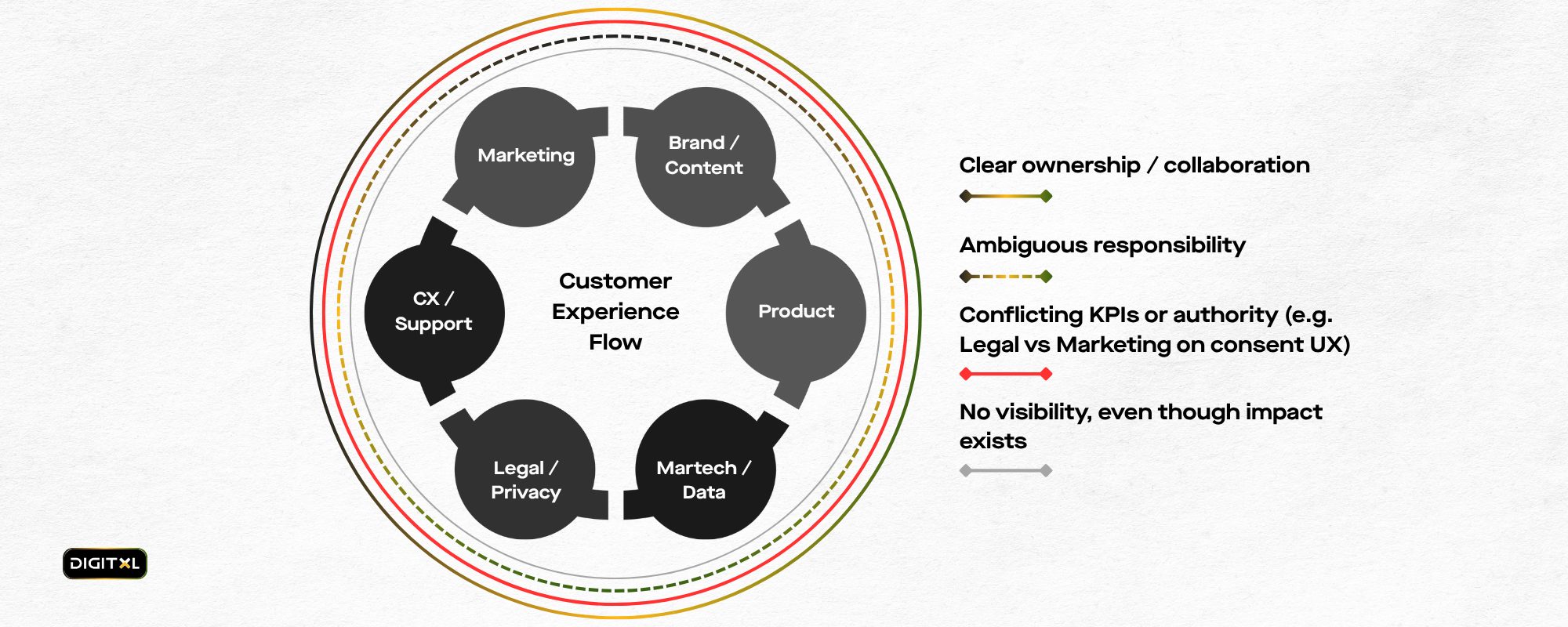

5. Different teams read the same journey differently

In complex stacks, one experience often runs through five different toolsets and ten different internal definitions of “working.”

- Product sees adoption

- Analytics sees a flat drop-off rate

- CX sees a spike in “where’s my order?” tickets

- CRM sees unsubscribes

- Privacy sees cookie inconsistencies

Each team responds based on their lens. None of them are wrong.

But the experience doesn’t sit neatly in any single report. It shows up where the views collide — and when the fixes compete.

Optimisation here means creating room for shared diagnostics. Not just “what happened,” but “where do we start tracing it?”

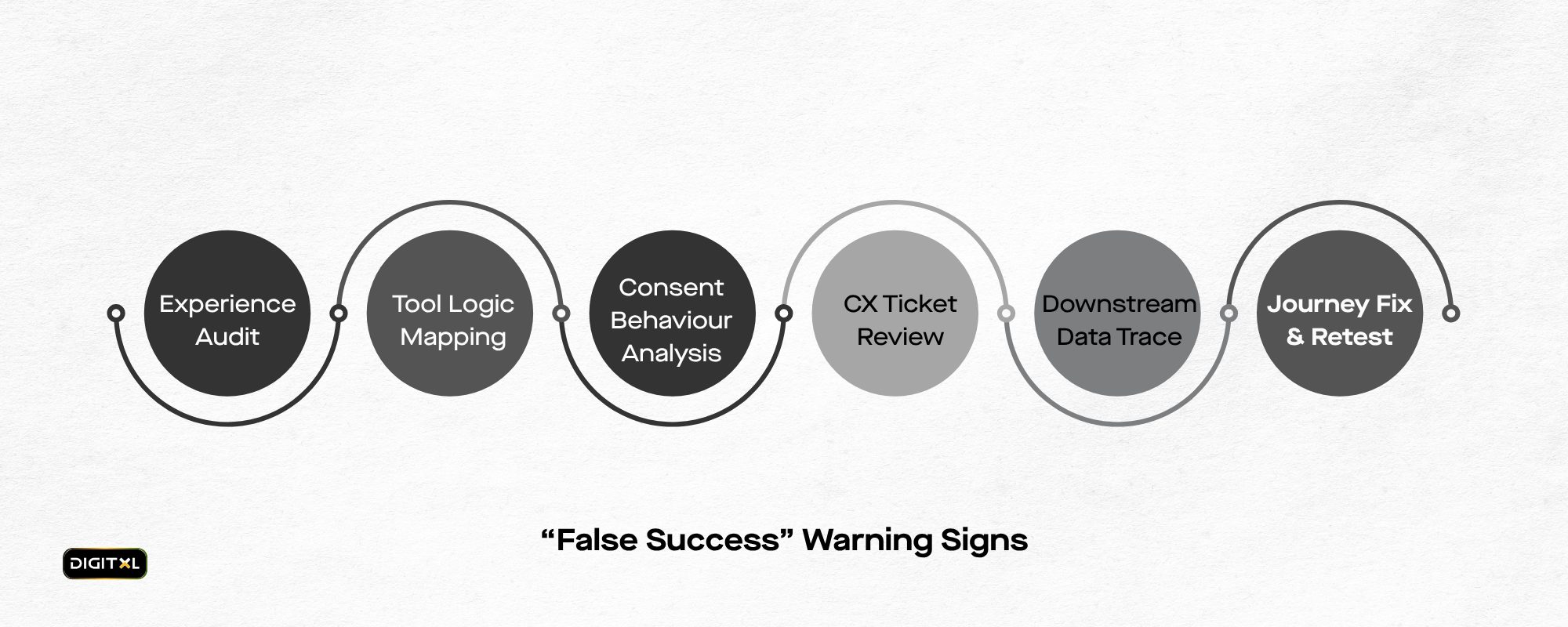

6. Most experience work isn’t net-new. It’s resolution-focused.

Some of the most effective optimisation initiatives we’ve supported weren’t about redesigns or major rebuilds. They were about unblocking.

- Clarifying how consent flags carry through login events

- Rewriting one content module that was consistently causing exit loops

- Aligning campaign source tags with backend routing logic

- Re-mapping fallback states for invalid combinations of personalisation and eligibility

Small moves, structurally placed.

They didn’t uplift CVR overnight, but they prevented data loss, confusion, and repeat support effort.
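As a rough illustration of re-mapping fallback states, the sketch below resolves a requested personalisation variant against a user's eligibility flags and falls back to a default when the combination is invalid. The variant names, flag names, and the `resolve_variant` helper are all hypothetical.

```python
# Hypothetical variant and eligibility names, for illustration only.
DEFAULT_VARIANT = "generic_home"

# Each personalised variant lists the eligibility flags it requires.
VARIANT_REQUIREMENTS = {
    "loyalty_offer": {"marketing_consent", "loyalty_member"},
    "regional_promo": {"marketing_consent", "in_promo_region"},
    "generic_home": set(),
}


def resolve_variant(requested, eligibility):
    """Return (variant_to_serve, reason), falling back when the combination is invalid."""
    required = VARIANT_REQUIREMENTS.get(requested)
    if required is None:
        return DEFAULT_VARIANT, f"unknown variant '{requested}'"
    missing = required - set(eligibility)
    if missing:
        # Record why the fallback fired so the gap can be traced later.
        return DEFAULT_VARIANT, "missing: " + ", ".join(sorted(missing))
    return requested, "ok"


print(resolve_variant("loyalty_offer", {"marketing_consent"}))
# ('generic_home', 'missing: loyalty_member')
```

Returning the reason alongside the variant keeps the fallback explicit rather than silent, which is what makes it traceable downstream.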

7. In high-performing teams, experience becomes infrastructure

There’s a point where experience stops being a project — and starts being operationalised.

In those teams, we see:

- Tag QA includes not just firing and sequence, but consent validation

- Test briefs require downstream scenario mapping before launch

- Personalisation is condition-checked against legal content flags

- Design reviews include policy consistency and ID handover clarity

- Success metrics blend behavioural outcomes with operational stability

These teams are sequencing more cleanly. Experience stops being a last-mile concern, and starts becoming a systems habit.
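A tag QA step that includes consent validation could look something like the sketch below. It compares the tags that fired on a page against the consent categories the user granted. The tag names, the category mapping, and the `unapproved_tags` helper are assumptions for illustration, not the API of any tag manager or consent platform.

```python
# Hypothetical tag-to-category mapping; real names depend on your consent platform.
TAG_CATEGORIES = {
    "analytics_pageview": "analytics",
    "ad_pixel": "advertising",
    "session_cookie": "necessary",
}


def unapproved_tags(fired_tags, granted_categories):
    """Return fired tags whose consent category was not granted, in firing order."""
    # Assumption: the "necessary" category never requires opt-in.
    granted = set(granted_categories) | {"necessary"}
    violations = []
    for tag in fired_tags:
        category = TAG_CATEGORIES.get(tag)
        # Unmapped (for example legacy) tags are treated as violations by default.
        if category is None or category not in granted:
            violations.append(tag)
    return violations


fired = ["analytics_pageview", "ad_pixel", "session_cookie", "legacy_tag"]
print(unapproved_tags(fired, {"analytics"}))  # ['ad_pixel', 'legacy_tag']
```

Treating unmapped tags as violations is a deliberate choice in this sketch: it surfaces legacy tags that were never classified instead of letting them pass unnoticed.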

8. Talk strategy

Experience optimisation isn’t a fix to apply after conversion rate slows. Let’s talk strategy, and let our experts analyse and audit your website. We’ll take it from there!

P.S. It’s free!